Course Description

Modern video games employ a variety of sophisticated algorithms to produce groundbreaking 3D rendering, pushing the visual boundaries and interactive experience of rich environments. This course presents state-of-the-art, production-proven techniques for fast, interactive rendering of the complex and engaging virtual worlds of video games.

This year the course includes speakers from several innovative companies, such as Ubisoft, Sledgehammer Games, Activision, NVIDIA, Unity Technologies, Lucasfilm, and Epic Games. Topics include variations on the latest techniques for modern rendering pipelines; high-quality depth-of-field post-processing; advances in material representation for deferred rendering pipelines, including improvements in subsurface scattering and PBR material representations; improvements to diffuse multi-scattering BRDFs; volumetric rendering techniques; ray tracing techniques for accurate soft shadows in real time; and many more.

This is the course to attend if you are in the game development industry or want to learn the latest and greatest techniques in the real-time rendering domain!

Previous years’ Advances course slides: go here

Syllabus

Advances in Real-Time Rendering in Games: Part I

Monday, 13 August 2018, 9am - 12:15pm | East Building, Ballroom BC, Vancouver Convention Centre

Advances in Real-Time Rendering in Games: Part II

Monday, 13 August 2018, 2pm - 5:15pm | East Building, Ballroom BC, Vancouver Convention Centre

Prerequisites

Working knowledge of modern real-time graphics APIs such as DirectX, Vulkan, or Metal, and a solid basis in commonly used graphics algorithms. Familiarity with the concepts of programmable shading and shading languages. Familiarity with the hardware and software capabilities of shipping game consoles is a plus but not required.

Intended Audience

Technical practitioners and developers of graphics engines for visualization, games, or effects rendering who are interested in interactive rendering.

Advances in Real-Time Rendering in Games: Part I

9:00 am

Natalya Tatarchuk

Welcome and introduction

9:10 am

Steve McAuley (Ubisoft)

The challenges of rendering an open world in Far Cry 5

10:10 am

Danny Chan (Sledgehammer)

Material advances in Call of Duty: WWII

11:10 am

Guillaume Abadie (Epic Games)

A life of a bokeh

12:10 pm

Closing Q&A

Advances in Real-Time Rendering in Games: Part II

2:00 pm

Natalya Tatarchuk

Welcome (and welcome back!)

2:05 pm

Sébastien Lagarde (Unity Technologies)

Evgenii Golubev (Unity Technologies)

The road toward unified rendering with Unity’s high definition rendering pipeline

3:05 pm

Evgenii Golubev (Unity Technologies)

Efficient screen-space subsurface scattering using Burley’s normalized diffusion in real-time

3:35 pm

Matt Pharr (NVIDIA)

Real-time rendering’s next frontier: adopting lessons from offline ray tracing to practical real-time ray tracing pipelines

4:35 pm

Stephen Hill (Lucasfilm) (presenter)

Morgan McGuire (NVIDIA) (presenter)

Eric Heitz (Unity Technologies) (non-presenting contributor)

Real-time ray tracing of correct* soft shadows (*without a shadow of a doubt)

5:15 pm

Natalya Tatarchuk

Closing Remarks

Course Organizer

Natalya Tatarchuk (@mirror2mask) is a graphics engineer and a rendering enthusiast at heart. As the VP of Graphics at Unity Technologies, she focuses on driving state-of-the-art rendering technology and graphics performance for the Unity engine. Previously, she was the Graphics Lead and an Engineering Architect at Bungie, working on an innovative cross-platform rendering engine and game graphics for Bungie’s Destiny franchise, including leading graphics on the upcoming Destiny 2 title. Natalya also contributed graphics engineering to the Halo series, such as Halo: ODST and Halo: Reach. Before moving into game development full-time, Natalya was a graphics software architect and a lead in the Game Computing Application Group at AMD Graphics Products Group (Office of the CTO), where she pushed parallel computing boundaries by investigating advanced real-time graphics techniques. Natalya has been encouraging sharing in the games graphics community for several decades, largely by organizing a popular series of courses such as Advances in Real-Time Rendering and Open Problems in Real-Time Rendering at SIGGRAPH. She has also published papers and articles at various computer graphics conferences and in technical book series, and has presented her work at graphics and game developer conferences worldwide. Natalya is a member of multiple industry and hardware advisory boards. She holds an M.S. in Computer Science from Harvard University with a focus in Computer Graphics and B.A. degrees in Mathematics and Computer Science from Boston University.

The Challenges of Rendering an Open World in Far Cry 5

Abstract: Open worlds with dynamic time-of-day cycles pose a significant challenge to graphics development. There are fewer hiding places for the weaknesses and edge cases in our rendering features, as care has to be taken to ensure features work in all scenarios. This talk discusses some of the challenges faced on Far Cry 5 in developing a water system and a physically-based time-of-day cycle, and closes by looking at some small techniques to improve the lives of artists.

Bio: Stephen McAuley is a 3D Technical Lead at Ubisoft Montreal on the Far Cry Central Tech team, where he holds the vision for the future of the 3D engine. During his time at Ubisoft, he has worked on many Far Cry games, spearheading the switch to physically-based lighting and shading, working towards a more data-driven rendering architecture, and focusing on visual quality. Previously, he was a graphics programmer at Bizarre Creations, shipping games such as Blur and Blood Stone.

Materials (Updated: September 4th 2018): PPTX (382 MB), PDF (17 MB)

Material Advances in Call of Duty: WWII

Abstract: This session will describe a number of improvements that were made to the Call of Duty: WWII shaders for lit opaque surfaces. The authors extended the diffuse BRDF to model Lambertian microfacets with multi-scattering. They derived a simple method to mipmap normal and gloss maps by directly correlating gloss to the average normal length of a distribution, bypassing an intermediate representation such as variance. Cavity maps are then auto-generated for every surface that has a normal map, and the presentation will also show how the authors handled occlusion and indirect lighting in cavities represented by these textures. The presenters will demonstrate how the environment split integral precalculation can be easily reused for energy-conserving diffuse. Finally, they will show how rational functions can be a useful tool to approximate many functions that can't be represented analytically or efficiently.

Bio: Danny Chan is a Principal Software Engineer at Sledgehammer Games, leading the rendering effort on Call of Duty: Modern Warfare 3, Call of Duty: Advanced Warfare and Call of Duty: WWII. Previously, he worked at Crystal Dynamics, Naughty Dog (as Lead Programmer on Crash Team Racing), EA and Namco.

Materials (Updated: February 5, 2019): PPTX (140MB), PDF (4MB), Course Notes PDF (4MB)

A Life of a Bokeh

Abstract: The lenses of physical cameras exhibit a depth-of-field phenomenon that is important in cinematography for bringing focus to the desired subject of a frame. The challenge of real-time depth of field is to output the highest bokeh quality while remaining fast. This talk will journey through the step-by-step implementation of a depth-of-field algorithm, starting from the existing state of the art and fixing artifacts one after the other to converge on the final implementation released in Unreal Engine 4.20. In order to achieve its opposing goals, including scalability across a large variety of hardware, high quality, and performance, the self-contained algorithm blends between:

- The fast performance of a scatter-as-gather approach, while efficiently solving physically plausible geometric occlusion with a large variety of blurring radii for the background, and hole filling for the foreground;

- The quality of scattered sprites for highlights, dealing with all the complexity implications of combining them plausibly;

- The detail of sub-pixel-accurate convolution for slightly out-of-focus regions, with the challenges of running alongside temporal anti-aliasing, drawn from an older but sadly never published algorithm used in Unreal Engine 4.

Bio: Guillaume Abadie is a Graphics Programmer at Epic Games, working directly on Unreal Engine 4's renderer, more specifically on post-processing. Notably, he implemented the temporal up-sampling and dynamic resolution duo shipping in Fortnite Battle Royale, running at 60Hz on consoles. He also built the compositing for The Human Race demo, which received an award at SIGGRAPH Real-Time Live 2017. (Twitter: @GuillaumeAbadie).

Materials (Updated: August 24th 2018): PPTX (150MB)

The Road toward Unified Rendering with Unity’s High Definition Render Pipeline

Abstract: When designing a rendering engine architecture, one frequently must choose whether to implement a forward or a deferred renderer, as each choice entails a significant number of design decisions for the material and lighting pipelines. Each of the approaches (forward, forward-plus, or deferred) has a number of strengths and deficiencies, widely covered in previous conference presentations from shipping games’ engines. The features offered by each rendering architecture vary widely, and often can be content-centric.

When designing the high-definition rendering pipeline (HDRP) for the Unity engine, the authors desired to leverage the strengths of each rendering approach, as necessary for various application contexts (a console game, VR application, etc.). Thus, an important design constraint for the architecture of HDRP became a unified feature set between the deferred and forward rendering paths.

This presentation will explain how the team tackled designing the lighting, material and rendering architecture for the HDRP with the feature-parity design constraint as one of the main pillars. It will cover the details of the flexible G-buffer layout architecture, explain the reasoning behind the design choices made, and describe the optimizations necessary for efficient execution on modern console hardware. In addition, the authors will present advanced developments in the field of physically-based rendering and materials, focusing on the novel BRDF model used for the rendering pipeline. The talk will also provide a framework for correctly mixing normals with complex materials for evaluation at runtime. Lastly, the architecture and technical details of the physically-based volumetric lighting approach will be described, along with the necessary optimizations for fast performance.

Bios: Sébastien Lagarde is a lead graphics programmer at Unity Technologies, where he's driving the architecture of Unity's high definition rendering pipeline, amongst other projects. Prior to Unity, he worked on many game consoles for a variety of titles, from small casual games to AAA (Remember Me, Mirror's Edge 2, Star Wars Battlefront, among others). Sébastien has previously worked for Neko Entertainment, Darkworks, Trioviz, Dontnod and Electronic Arts / Frostbite.

Evgenii Golubev is a Graphics Programmer on Unity’s Paris Graphics team, where he contributes to Unity’s high definition rendering pipeline architecture, focusing on physically-based materials and advanced light transport and making them run efficiently on modern hardware. Previously, he worked on rendering technology at Havok and Microsoft.

Materials (Updated: August 25th 2018): PDF (14 MB), Book of the Dead HD RP Video, Automotive HD RP Video, Volumetrics in HD RP Video (Slide 134), HD RP Participating Media Authoring Video (Slide 137), Spotlight with highly forward-scattering fog GIF (Slide 140)

Efficient Screen-Space Subsurface Scattering Using Burley’s Normalized Diffusion in Real-Time

Abstract: Screen-space subsurface scattering by Jimenez et al. and the multitude of parameterization options developed for it, such as Gaussian lobe, two Gaussian lobe, SVD, etc., have produced impressive results in rendering organic materials and characters. However, these methods focused primarily on the multi-scattering contribution rather than the single-scattering contribution (aside from simple approximations via an extra weight for the original diffuse texture). These previously introduced techniques rely on a separable Gaussian filter to achieve fast performance on modern GPUs.

In this talk, the authors build on the recently introduced Burley normalized diffusion method, bringing this technique developed for offline rendering pipelines into real-time with a novel screen-space subsurface scattering method. This method accounts for both multi- and single-scattering appearance models. Its parameterization model is simple and is derived from real-world measurements. The authors will compare the quality of this approach to the more commonly used screen-space subsurface scattering techniques and explain the optimizations required to evaluate its non-separable kernel efficiently in real-time. This method is used in production and is highly performant on commodity console hardware, such as PlayStation 4 and Xbox One at 1080p resolution.
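As a rough illustration of what "normalized" means for the diffusion profile referred to above, the sketch below evaluates the radially symmetric profile from Burley's published model, R(r) = A (exp(-r/d) + exp(-r/(3d))) / (8 pi d r), and checks numerically that it integrates to the albedo A over the plane. Because the profile depends on the radial distance r rather than factoring into a product of a function of x and a function of y, it cannot be applied as two 1D passes the way a Gaussian can. The function names, the parameter name `d`, and the integration settings are assumptions made for this example; this is not the presenters' implementation, which may differ.

```python
import math


def burley_profile(r, d, albedo=1.0):
    """Burley's normalized diffusion profile R(r) for radius r > 0.

    `d` is the shape parameter controlling the scattering distance
    (the name is an assumption for this sketch).
    """
    return albedo * (math.exp(-r / d) + math.exp(-r / (3.0 * d))) / (
        8.0 * math.pi * d * r
    )


def integrate_over_plane(d, albedo=1.0, r_max_in_d=60.0, steps=200_000):
    """Midpoint-rule estimate of the integral of R(r) * 2*pi*r dr on [0, r_max].

    The upper limit is expressed in multiples of d; the tail beyond it is
    negligible because both exponentials have decayed to almost zero.
    """
    r_max = r_max_in_d * d
    dr = r_max / steps
    total = 0.0
    for i in range(steps):
        r = (i + 0.5) * dr  # midpoint avoids evaluating at r = 0
        total += burley_profile(r, d, albedo) * 2.0 * math.pi * r * dr
    return total


if __name__ == "__main__":
    # The total diffusely reflected energy should match the albedo.
    print(integrate_over_plane(d=0.5, albedo=0.8))
```

The normalization check is independent of `d`: changing the shape parameter redistributes energy over distance without changing the total.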

Bio: Evgenii Golubev is a Graphics Programmer on Unity’s Paris Graphics team, where he contributes to Unity’s high definition rendering pipeline architecture, focusing on physically-based materials and advanced light transport and making them run efficiently on modern hardware. Previously, he worked on rendering technology at Havok and Microsoft.

Materials (Updated: August 25th 2018): PDF (5MB)

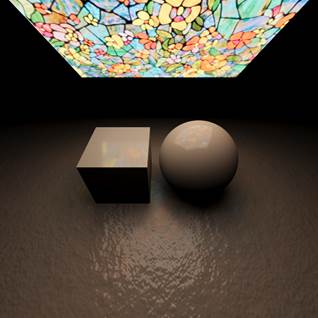

Real-Time Rendering’s Next Frontier: Adopting Lessons from Offline Ray Tracing to Real-Time Ray Tracing for Practical Pipelines

Abstract: Ray tracing has finally arrived in the real-time graphics pipeline, tightly integrated with rasterization, shading, and compute. The ability to trace rays offers the promise of finally being able to accurately simulate global light scattering in real-time graphics. Just as physically-based materials have revolutionized real-time graphics in recent years, physically-based global lighting offers the chance to have a similar impact on both image quality and developer and artist productivity.

Getting there won't be easy, however: currently, only a handful of rays can be traced at each pixel, which requires great care and creativity from the graphics programmer. There are lessons to be learned from offline rendering, which broadly adopted ray tracing ten years ago. The author will discuss the experience of offline pipelines with the technology and highlight a variety of key innovations developed in that realm that are worth knowing about for developers adopting real-time ray tracing.

Bio: Matt Pharr is a Distinguished Research Scientist at NVIDIA, where he works on ray tracing and real-time rendering. He is the author of the book Physically Based Rendering, for which he and the co-authors were awarded a Scientific and Technical Academy Award in 2014 for the book's impact on the film industry.

Materials (Updated: August 24th 2018): PDF (74 MB)

Real-Time Ray Tracing of Correct* Soft Shadows (*without a shadow of a doubt)

Abstract: With recent advances in real-time shading techniques, we can now light physically based materials with complex area light sources. Alas, one critical challenge remains: accurate area-light shadowing. The arrival of DXR opens the door to solving this problem via ray tracing, but the correct formulation isn't obvious and there are several potential pitfalls. For instance, the most popular strategy consists of tracing rays to points randomly distributed on the light source and averaging the visibility, but we will show that this is incorrect and produces visual artifacts. Instead, we propose a definition for soft shadows that allows us to compute the correct result, along with an efficient implementation that works with existing analytic area lighting solutions.

Note: this talk is an extended presentation of the paper Combining Analytic Direct Illumination and Stochastic Shadows (presented at I3D) that includes additional practical details.
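To make the pitfall described in the abstract concrete, here is a toy sketch contrasting a plain average of visibility with an illumination-weighted average used as a ratio against the analytic unshadowed result, which is one reading of the formulation in the paper named above. It assumes light samples drawn uniformly on the light, with a hypothetical per-sample unshadowed contribution (for example BRDF times radiance times geometry term) and a binary visibility from a shadow ray. All names and numbers are invented for illustration; this is not the authors' implementation.

```python
def unweighted_shadow(visibility):
    """Plain average of per-sample visibility (the popular strategy)."""
    return sum(visibility) / len(visibility)


def weighted_shadow(contrib, visibility):
    """Visibility weighted by each sample's unshadowed contribution.

    Equivalent to (stochastic shadowed estimate) / (stochastic unshadowed
    estimate) when both use the same samples.
    """
    total = sum(contrib)
    if total == 0.0:
        return 0.0  # no light reaches the point, so the shadow term is moot
    return sum(f * v for f, v in zip(contrib, visibility)) / total


def shade(analytic_unshadowed, contrib, visibility):
    """Analytic unshadowed lighting scaled by the weighted shadow ratio."""
    return analytic_unshadowed * weighted_shadow(contrib, visibility)


if __name__ == "__main__":
    # Hypothetical samples: the brightest part of the light is occluded.
    contrib = [6.0, 2.0, 1.0, 1.0]
    visibility = [0.0, 1.0, 1.0, 1.0]
    print(unweighted_shadow(visibility))         # 0.75
    print(weighted_shadow(contrib, visibility))  # 0.4
```

In this made-up case the plain average reports the point as 75% lit even though the occluded sample carried most of the energy, while the weighted ratio reports 40%.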

Speakers: Stephen Hill (Lucasfilm) and Morgan McGuire (NVIDIA)

Non-speaking contributor: Eric Heitz (Unity Technologies)

Bios: Stephen Hill is a Senior Rendering Engineer within Lucasfilm’s Advanced Development group, where he is engaged in physically based rendering R&D for real-time productions such as the Carne y Arena VR installation experience.

Morgan McGuire is a scientist at NVIDIA, holds faculty appointments at the University of Waterloo, Williams College, and McGill University, and has co-authored several books, including The Graphics Codex and Computer Graphics: Principles and Practice.

Eric Heitz is a Research Scientist at Unity Technologies. His research focuses on physically based rendering including materials, lighting, sampling, LoDs, anti-aliasing, etc.

Materials (Updated: August 27th 2018): PDF (78MB), Video
